Java 9 Modularity - First look

Hi, I am Malathi Boggavarapu. I work at Volvo Group and live in Gothenburg, Sweden. I have been working with Java for several years and have broad experience across various technologies.

The module system is one of the biggest changes to Java in its 20 years of existence, because it affects not only the language, through new keywords and features, but also the compiler. The compiler needs to know how to translate the new features, and it needs to know the module boundaries so that it can enforce them at compile time. After compilation, the metadata of the modules is preserved in binary format and loaded by the virtual machine. The JVM must also know about modules and their boundaries; it uses this information to ensure a reliable configuration of modules and to enforce the boundaries again at runtime.

Tooling needs to change for the module system as well. Think of IDEs and libraries: these too need to adapt to the new modular reality. Considering all of this, it is not surprising that Java 9 took so long to release.

Why was the module system added to Java in the first place? It turns out the first reason is rather selfish.

1) It is to modularize the JDK itself. Since the Java runtime libraries and the JDK are 20 years old, we can imagine there is a lot of technical debt in there. Modularizing the platform is one way to address this debt: strange dependencies get untangled, which makes the JDK maintainable again.

2) Of course, once the module system is part of the JDK, developers can build modular applications as well. The same module system that modularizes the JDK can be used to modularize our own applications.

But keep in mind that using the module system is optional. When moving to Java 9, we can keep using Java the way we always have, or we can choose to adopt the module system and make our own systems more maintainable.

In both scenarios, however, you are still running on top of a modularized JDK. This can affect your application code even if you choose not to use the module system.

What is modularity and why is it important?

To answer this question, we first have to look at how Java currently works and what is wrong with it. Something Java developers are intimately familiar with is the classpath. A classpath can grow very large without having any structure.

1) How do you know whether the classpath is complete? Are all the JARs needed to run the application there? You won't find out until you run the application and trigger a code path that tries to load a class from a missing JAR. This may very well happen in production, when a user clicks a button that leads to a code path that needs a class from the missing library. That is bad, and it should not happen.

2) The second problem is that, although it looks like you have a nice classpath made up of individual JARs, there is no separation between the JARs at all. When the JVM starts, it treats all the classes on the classpath as one big flat list and loads classes from that list. We already covered the case of missing JARs. But what if multiple JARs contain conflicting versions of the same class? The JVM simply picks the first one it encounters on the classpath. Also, any class on the classpath can access the other classes on the classpath, so even though your application is packaged in separate JARs, some sort of malicious third-party library could in principle access your code. There is no notion of encapsulation.
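The first problem can be sketched in a few lines of Java. This is only an illustration, not code from the easytext project: the class name com.example.missing.Widget is made up and stands in for a class from a JAR that was forgotten on the classpath. Nothing complains at startup; the absence only shows when the lookup actually runs.

```java
// Illustrative sketch: a missing class is only detected when the code path
// that needs it is executed, not when the application starts.
final class ClasspathProbe {

    /** Returns true if the named class can be located by this class's loader. */
    static boolean isLoadable(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathProbe.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Always present, because it ships with the Java runtime
        System.out.println("java.lang.String loadable: " + isLoadable("java.lang.String"));

        // Hypothetical class name standing in for a class from a forgotten JAR
        System.out.println("com.example.missing.Widget loadable: "
                + isLoadable("com.example.missing.Widget"));
    }
}
```

In a real application there is usually no such probe: the failure surfaces as an error deep inside whatever feature happened to need the class.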

All in all, you can say that the classpath is just a bowl of spaghetti. It is fine when it works and even looks delicious, but when something goes wrong you really cannot see what is going on. Clearly there is much room for improvement. But the improvement should not be limited to the Java runtime; it also matters at coding time and design time, when we create applications. We all know applications that just grow and grow without clear boundaries or clear responsibilities. People call them monolithic applications. How do you prevent that? Modularity is one of the answers to this question.

A monolithic application is hard to understand. New people joining the team will have a hard time figuring out where to look in the code and how to work with it. This leads to a codebase with decreased maintainability: if you fix a bug somewhere, it is really hard to see which other parts of the code might break. We all know the stories of a small bug fix affecting a completely different part of the application. In the end, all of this leads to an application that is rigid, inflexible and resistant to change.

So modularity is the ultimate agile tool. It helps you create flexible, understandable applications with a clear separation into modules that are generally easier to work with.

What is a module?

At a high level, a module has a name and groups related code. This code can either be encapsulated inside the module or be visible outside of it. A module is self-contained: it contains everything it needs to perform its function, and if it does not, it explicitly states which other modules it needs to do its job.

In practice, we break the application down into small modules, which keeps it manageable. Of course, if you divide an application into modules, you need clear interfaces between them. This helps you think about the APIs of the different parts of the application. Moreover, each module can hide its implementation details and expose only the clear interfaces that other modules may use. This way, if we change the implementation details of one module, the other modules are not impacted.

Finally, if an application consists of multiple modules and each module explicitly states which other modules it uses, we have the notion of reliable configuration. This means the explicit dependency information can be used at both compile time and runtime to verify that all dependencies are in place.

This is so much better than the classpath situation, where we only detect problems at runtime.

Three tenets of Modularity

1) Strong encapsulation - If a module can encapsulate its internal implementation details, it can change internally without affecting any of the modules that use it. This is very important for developing robust applications.

2) Well-defined interfaces - There is also the other side of the coin of strong encapsulation. If a module encapsulates everything, no other module can meaningfully use it. So it has to have some public, well-defined interfaces as well. These are used for interaction between modules, and they should be stable.

3) Explicit dependencies - Finally, explicit dependencies play a big role in modular systems. Each module knows exactly which other modules it needs.

The Java module system addresses each of these tenets of modularity.

The JDK before Java 9

Whenever we run a Java application, we run it on the Java runtime library, rt.jar: one huge library containing all the classes of the Java platform that your application might use. This ranges from the collection classes that everybody uses, String, Object and the basic types, to RMI classes and many more. Many applications never use some of these JDK classes, but they are still part of the one big rt.jar. This rt.jar is over 20 years old, so you can probably see how it has grown big and how its classes have become tangled. It is just not very clear what depends on what inside this JAR. This is not so much a problem for application developers as it is for JDK developers, because having so many entangled classes in one huge library makes it very hard to evolve the platform.

Can you imagine making a change to the Java runtime libraries? There are literally tens of thousands of classes inside rt.jar, and changing one class somewhere is bound to have an impact in places you don't even know about. And changing or adding things may even be the easy case. What is really hard is deprecating and removing code from this big rt.jar, because Java has always been very big on backward compatibility.

In summary, the situation for the JDK runtime libraries was pretty dire, and it wasn't about to get better.

Fortunately the modularization of the JDK offers a way forward.

The picture painted here of the JDK runtime library may remind you of your own application. It too may be one big module with many unknown dependencies, where changing one part may affect unknown other parts and where it is hard to evolve. If so, you will probably appreciate the next picture, because a modularized JDK shows how you can modularize your own application.

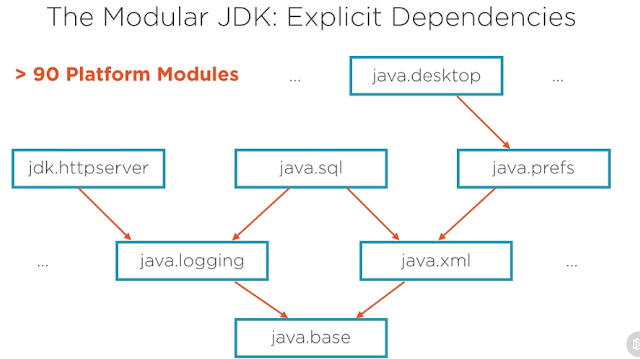

Here we see a diagram of the modular JDK in Java 9.

It shows only a few of the modules. This diagram is a so-called dependency graph: every box is a module, and an arrow from one box to another means that the module needs the other module to perform its functions.

The most important module is java.base, at the bottom of the diagram. Every other module depends on java.base, because it contains foundational classes such as java.lang.Object, java.lang.Integer and so on. Every module implicitly depends on java.base.
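To see this from code, the small sketch below asks a few well-known classes which module they belong to, using Class.getModule() from Java 9. The class name ModuleOfClass is just a name chosen for this illustration.

```java
import java.util.logging.Logger;

// Illustrative sketch: every class belongs to a module, and the foundational
// classes such as Object and Integer live in java.base.
final class ModuleOfClass {

    /** Returns the name of the module that contains the given class. */
    static String moduleNameOf(Class<?> type) {
        Module module = type.getModule();
        // Classes loaded from the classpath end up in an unnamed module
        return module.isNamed() ? module.getName() : "(unnamed module)";
    }

    public static void main(String[] args) {
        System.out.println("Object  -> " + moduleNameOf(Object.class));
        System.out.println("Integer -> " + moduleNameOf(Integer.class));
        System.out.println("Logger  -> " + moduleNameOf(Logger.class));
        // This class itself: unnamed when run from the classpath
        System.out.println("ModuleOfClass -> " + moduleNameOf(ModuleOfClass.class));
    }
}
```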

We see that java.logging and java.xml have an explicit arrow to java.base. The diagram draws that arrow only when java.base is the module's sole dependency, which is the case for java.logging and java.xml.

The java.sql module uses java.logging, which makes sense because it may have some internal logging to do. It also uses the java.xml module, which may be a bit more surprising, but it is there to handle the XML capabilities of some databases. By looking at the modules and their explicit dependencies in the diagram, you already know much more about what is in the JDK than you would by looking at rt.jar. Here we have a clear picture of what kind of functionality is in the JDK and what the dependencies are between the modules offering this functionality.

So if we apply the same modular approach to our own applications, they will become as understandable as this picture.

How are modules defined

Modules are defined by module descriptors. Let's look at an example: the module descriptor of java.base. A module descriptor lives in a file called module-info.java. Its most important part is the declaration of the module's name; in this case it uses the module keyword followed by the name java.base.

In the body of the module declaration we can add statements that describe the module further. In the case of java.base, we know some packages need to be public. By default no package is accessible from other modules. If we want to give other modules access to a package, we have to export it.

See the picture below for a clear view.

Note that there are no statements referring to internal packages. This is because any package that is not listed as exported is encapsulated in the module and not accessible to other modules.
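The difference between exported and encapsulated packages can also be checked at runtime. The sketch below uses Module.isExported(String), which reports whether a package is exported to everyone. The package jdk.internal.misc is used here as an example of an internal java.base package; treat that choice as an assumption about the JDK you run on.

```java
// Illustrative sketch: asking java.base which of its packages are exported
// to all other modules and which are encapsulated.
final class ExportCheck {

    /** Returns true if java.base exports the package to every module. */
    static boolean isExportedByJavaBase(String packageName) {
        Module javaBase = Object.class.getModule();
        return javaBase.isExported(packageName);
    }

    public static void main(String[] args) {
        // Public API packages
        System.out.println("java.lang exported: " + isExportedByJavaBase("java.lang"));
        System.out.println("java.util exported: " + isExportedByJavaBase("java.util"));

        // An internal package (assumed to exist in java.base on this JDK)
        System.out.println("jdk.internal.misc exported: "
                + isExportedByJavaBase("jdk.internal.misc"));
    }
}
```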

Now let's look at one more module descriptor, that of the java.sql module.

java.sql exports a few packages, as you see above, and its dependencies are declared using requires. There is a big difference between exports and requires statements: an exports statement takes a package name as its argument, whereas a requires statement takes a module name.
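The same information can be read programmatically. The sketch below looks up the descriptor of java.sql through ModuleFinder.ofSystem() and lists what it exports (package names) and what it requires (module names). It assumes the runtime image contains java.sql, which is the case for a standard JDK; the exact lists can differ between Java versions.

```java
import java.lang.module.ModuleDescriptor;
import java.lang.module.ModuleFinder;
import java.util.Set;
import java.util.TreeSet;
import java.util.stream.Collectors;

// Illustrative sketch: reading a platform module's descriptor at runtime.
final class DescribeModule {

    /** Finds the descriptor of a module in the current runtime image. */
    static ModuleDescriptor descriptorOf(String moduleName) {
        return ModuleFinder.ofSystem()
                .find(moduleName)
                .orElseThrow(() -> new IllegalArgumentException(
                        "No system module named " + moduleName))
                .descriptor();
    }

    /** Package names the module exports to everyone. */
    static Set<String> exportedPackages(ModuleDescriptor descriptor) {
        return descriptor.exports().stream()
                .filter(export -> !export.isQualified())
                .map(ModuleDescriptor.Exports::source)
                .collect(Collectors.toCollection(TreeSet::new));
    }

    /** Module names the module requires (including the implicit java.base). */
    static Set<String> requiredModules(ModuleDescriptor descriptor) {
        return descriptor.requires().stream()
                .map(ModuleDescriptor.Requires::name)
                .collect(Collectors.toCollection(TreeSet::new));
    }

    public static void main(String[] args) {
        ModuleDescriptor javaSql = descriptorOf("java.sql");
        System.out.println("exports (packages): " + exportedPackages(javaSql));
        System.out.println("requires (modules): " + requiredModules(javaSql));
    }
}
```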

Creating your First module

See the screenshot below, which shows a project called 1_EasyText_SingleModule containing a single module, easytext. The easytext module contains Main.java and module-info.java. Main.java is a normal Java class, and module-info.java describes the module.

If you look at Main.java, you can see import statements, and all the imported packages are exported by the java.base module.

In module-info.java we add all the information required to describe the module.

module easytext {

requires java.base;

}

Actually, we do not need to add java.base, because every module requires it implicitly. But there is no harm in adding it to module-info.java, so we will keep it anyway.

To compile the module, open a console and run the following:

javac --module-source-path src -d out $(find . -name '*.java')

--module-source-path is the new option for compiling Java sources organized in modules. With it, the Java files of all modules inside the src folder are compiled.

out is the output directory where the compiled classes are saved.

We always have to list all the source files that the Java compiler should compile, so we use the find command in a shell command substitution to collect every .java file.

To execute the Main class we use the following command. The Main class runs and its output is printed to the console.

java --module-path out -m easytext/javamodularity.easytext.Main

Working with Modules

In this section we will modularize easytext further. Previously we had a single module containing all the code for easytext. Now we want to split it into two modules: easytext.cli and easytext.analysis.

The cli module (cli stands for command line interface) handles all interaction with the command line: it reads the arguments and the file, and then passes the parsed text to the analysis module. The analysis module contains all the logic for calculating the text complexity. Once the analysis module is done, it returns the metric to the cli module, which prints it to the command line. So there is a clear division between the two modules, and the cli module requires the analysis module in order to work.

Let's look at an example of the application with two modules.

Inside the easytext.cli module, the last println statement creates a FleschKincaid object, a class that lives in a different module, easytext.analysis.

So now we have to update module-info.java for each of the modules.

The module-info.java of easytext.cli should look like this:

module easytext.cli {

requires easytext.analysis;

}

And the module-info.java of easytext.analysis should look like this:

module easytext.analysis {

exports javamodularity.easytext.analysis;

}

If we do not add the exports statement to the file above, the cli module cannot access any classes from the analysis module, and because of strong encapsulation the Java compiler reports an error. With the exports statement, all public classes in the javamodularity.easytext.analysis package of the analysis module are exported and can be used by the cli module.

Please note that there is one more package, called "internal", inside the analysis module. Since we have not listed that package in an exports statement in module-info.java, it is encapsulated and not exposed for other modules to use.

Now we compile all the modules at once and run the application using the commands below.

javac --module-source-path src -d out $(find . -name '*.java')

java --module-path out -m easytext.cli/javamodularity.easytext.cli.Main

Readability

In the picture above, easytext.cli reads the easytext.analysis module. For that to work, the module descriptor of the cli module needs a requires statement, and the analysis module needs to export its packages so that they are accessible. This was shown above with an example. But readability is not transitive, as we will now discuss in more detail.

Readability is not Transitive

In the picture above, easytext can read the analysis module, and the analysis module in turn can read the library module. But easytext cannot read the library module, even though there is a chain of dependencies between them. So we say that readability is not transitive.

Implied readability

Consider the use case below: we have an application that requires the java.sql module, and java.sql in turn requires the java.logging and java.xml modules.

Because readability is not transitive, the application can read java.sql but cannot read java.logging and java.xml. As a result, when the application uses parts of the java.sql API that refer to types from those modules, the compiler reports errors.

So what should we do now? Don't worry, there is a "requires transitive" statement for this. How should our module-info files change?

The module descriptor of the application does not change at all. Instead, the module descriptor of java.sql uses "requires transitive" instead of plain "requires" for java.logging and java.xml, which gives implied readability to the application module. If java.sql used plain requires for java.logging and java.xml, the compiler would report errors when the application uses the parts of the java.sql API that refer to those modules.
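The rule can be captured in a toy model. The sketch below is not how the JVM or the compiler resolves modules; it is a deliberately simplified model, written for this article, of the rule described above: a module reads what it requires directly, plus whatever those modules pass on through "requires transitive".

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Simplified model of readability; an illustration, not the real resolver.
final class ReadabilityModel {

    // module name -> (required module name -> declared with "requires transitive"?)
    private final Map<String, Map<String, Boolean>> requires = new HashMap<>();

    /** Records "from requires to" (or "requires transitive" when transitive is true). */
    ReadabilityModel require(String from, String to, boolean transitive) {
        requires.computeIfAbsent(from, key -> new LinkedHashMap<>()).put(to, transitive);
        return this;
    }

    /** Computes the set of modules the given module can read in this model. */
    Set<String> reads(String module) {
        Set<String> readable = new LinkedHashSet<>();
        Deque<String> pending = new ArrayDeque<>();

        // Direct dependencies are always readable
        for (String dependency : requires.getOrDefault(module, Map.of()).keySet()) {
            if (readable.add(dependency)) {
                pending.push(dependency);
            }
        }
        // Beyond that, only "requires transitive" edges are followed
        while (!pending.isEmpty()) {
            String current = pending.pop();
            for (Map.Entry<String, Boolean> edge
                    : requires.getOrDefault(current, Map.of()).entrySet()) {
                if (edge.getValue() && readable.add(edge.getKey())) {
                    pending.push(edge.getKey());
                }
            }
        }
        return readable;
    }

    public static void main(String[] args) {
        ReadabilityModel plain = new ReadabilityModel()
                .require("application", "java.sql", false)
                .require("java.sql", "java.logging", false)
                .require("java.sql", "java.xml", false);
        System.out.println("plain requires:      " + plain.reads("application"));

        ReadabilityModel implied = new ReadabilityModel()
                .require("application", "java.sql", false)
                .require("java.sql", "java.logging", true)
                .require("java.sql", "java.xml", true);
        System.out.println("requires transitive: " + implied.reads("application"));
    }
}
```

With plain requires the application only reads java.sql; with requires transitive it also reads java.logging and java.xml, without any change to its own descriptor.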

Aggregator modules

Let's say we have a library consisting of three independent modules. Maybe you split it up this way because it makes the library easier to maintain, or maybe it is just a logical way to divide the library into parts. Either way, we have Library Part1, Part2 and Part3.

Now if somebody wants to use your library from an application module, the question is: which library part do I require? All of them, or a single one? Of course you can document these choices along with the library, but that might not be the most convenient approach.

In some cases I just want to use a library without having to know about its individual parts. For that we can use an aggregator module.

As shown in the picture above, we aggregate the library parts Part1, Part2 and Part3 into a single module called Library, which uses a "requires transitive" statement for each of the parts. Note that the Library module contains no code of its own to export. The application just requires the Library module to access all the parts.

Still, it is good to know which library part you actually use: if you only use Part1, then Part2 and Part3 are unnecessary dependencies of the application, and an aggregator module obviously works against the main purpose of the module system. To use this feature wisely, find out which parts the application actually uses and, where practical, require only those in the module descriptor.
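As a rough sketch, the descriptor of such an aggregator can be built and inspected with the ModuleDescriptor API. The module names library, library.part1, library.part2 and library.part3 are made up for this illustration, and looksLikeAggregator is just a helper invented here to express the two properties described above: nothing exported, and every dependency declared with requires transitive.

```java
import java.lang.module.ModuleDescriptor;
import java.lang.module.ModuleDescriptor.Requires;
import java.util.Set;

// Illustrative sketch: what an aggregator module's descriptor looks like.
final class AggregatorSketch {

    /** Builds a descriptor equivalent to a module that only re-exports its three parts. */
    static ModuleDescriptor libraryDescriptor() {
        Set<Requires.Modifier> transitive = Set.of(Requires.Modifier.TRANSITIVE);
        return ModuleDescriptor.newModule("library")      // hypothetical module names
                .requires(transitive, "library.part1")
                .requires(transitive, "library.part2")
                .requires(transitive, "library.part3")
                .build();
    }

    /** True if the module exports nothing and passes on all its dependencies. */
    static boolean looksLikeAggregator(ModuleDescriptor descriptor) {
        boolean exportsNothing = descriptor.exports().isEmpty();
        boolean hasDependencies = descriptor.requires().stream()
                .anyMatch(r -> !r.name().equals("java.base"));
        boolean allTransitive = descriptor.requires().stream()
                .filter(r -> !r.name().equals("java.base"))
                .allMatch(r -> r.modifiers().contains(Requires.Modifier.TRANSITIVE));
        return exportsNothing && hasDependencies && allTransitive;
    }

    public static void main(String[] args) {
        ModuleDescriptor library = libraryDescriptor();
        System.out.println(library.name() + " requires " + library.requires());
        System.out.println("aggregator? " + looksLikeAggregator(library));
    }
}
```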

Platform Modules

In this section we make use of platform modules such as java.base, java.logging and so on.

We are now going to add a new module, easytext.gui, which provides a GUI for the application.

On the left side of the picture above you can see the new module, easytext.gui. It has a Main class that imports many JavaFX classes and extends the Application class from JavaFX. JavaFX launches the application through the launch method, which is called inside the main method.

We have to add the dependency information to the module-info.java file of the new module.

module easytext.gui{

requires easytext.analysis;

}

As the application also depends on JavaFX, we have to add dependency information about the JavaFX modules as well. So how do we find the JavaFX modules that our application uses?

There is a command to see which modules are part of the JDK:

java --list-modules

The command above lists all the modules that are present in the JDK. Try it, and you will see the JavaFX modules, whose names start with javafx. As we use a lot of controls in our application, let's dig deeper into the javafx.controls module and see which packages it contains.

java --describe-module javafx.controls

This gives a complete overview of what the javafx.controls module requires and exports.

As you can see in the last lines of the picture above, some packages are listed under conceals (released versions of the tool label them contains). These are the encapsulated packages. We do not write this in our own module descriptor files, but the tool shows it for platform modules to indicate that those packages are encapsulated.

So now we know which platform module is required to compile and run the application. We also have to export the package that contains the Main class of the gui module to the javafx.graphics module, because JavaFX uses reflection to launch the application and therefore needs access to that class. But we want to make the package available only to javafx.graphics. That is why we use a qualified export with the "to" keyword.

So let's add all of this to the module-info.java file.

module easytext.gui{

exports javamodularity.easytext.gui to javafx.graphics;

requires javafx.controls;

requires easytext.analysis;

}

Compile and run the application and check.

javac --module-source-path src -d out $(find . -name '*.java')

java --module-path out -m easytext.gui/javamodularity.easytext.gui.Main

Exposing and Consuming Services

One of the goals of creating modular applications is to make them extensible, and so far it seems we have done a good job. Remember that we have an easytext analysis module and two frontend modules, cli and gui, which both reuse the same analysis module. Now a question we should ask ourselves is: what happens if we add more analysis algorithms to the easytext application? What will the picture look like then?

Let's say we have four different analysis modules: easytext.analysis1, easytext.analysis2, easytext.analysis3 and easytext.analysis4.

Each of the frontend modules, cli and gui, has to require all of the analysis modules. The picture already looks cluttered. If we add a new analysis module, easytext.analysis5, we need to update the cli and gui modules with this new requirement. Clearly there is tight coupling between the frontend and analysis modules. If every frontend module needs to know about all the analysis implementation modules, that should raise a red flag. In the end, from the perspective of the frontend modules, we are not really interested in the implementations of the analyses; we just want to find out which analyses are available and call them through a unified interface.

What's wrong with the code?

The frontend classes call the analyse method directly after instantiating the FleschKincaid class. This creates tight coupling between the frontend modules and the analysis modules, even though the frontend modules just want to call the analyse method without knowing about the implementation details. This should not be the case; we should use interfaces here.

So we create an Analyzer interface and an implementation class, KincaidAnalyzer. The picture looks like the one below when we use the interface. Earlier, the implementation class was exported to the frontend modules; now we export the Analyzer interface instead, so the implementation details behind the Analyzer interface are abstracted away from the frontend modules.

Introducing Services

To solve the problem above, the module system provides a service catalog, or service registry. The idea is that some modules, called service provider modules or just provider modules, register an implementation class as a service in the service registry. This implementation class implements an interface (in this case MyServiceImpl implements MyService), and this interface is the point of communication between service providers and service consumers. So on the right-hand side we have any number of service provider modules providing implementations of the interface, and on the left-hand side we have service consumers, which use the service registry to obtain instances of the implementation classes. The service consumer does not need to know any implementation details; it gets an instance of the implementation class typed as the interface and calls its methods.

In code, it looks like this:

1) We have a module myApi that exports the com.api package, which contains the MyService interface. This package could contain many interfaces, but we use only one here, MyService. It has a single method, doWork.

2) The module descriptor of the consumer, myConsumer, which actually consumes the service, requires myApi. That is logical, because the service interface MyService lives in the myApi module. The second statement in the descriptor says

uses com.api.MyService;

This means that the consumer wants to use, or consume, the MyService interface by calling methods on it.

3) The myProvider module requires myApi and provides the implementation class MyServiceImpl for the MyService interface.

4) Finally, how do we obtain the services in the myConsumer module? We call ServiceLoader.load with the MyService interface, which returns an iterable of all the registered services that implement MyService. We can iterate over them, pick the appropriate one and call its doWork method.

See the picture below to get a clear idea of providers, consumers and services. Both myConsumer and myProvider can read the myApi module and have access to the interface, but there is no tight coupling between the two. The provider provides the implementation class of the interface, and the consumer uses the ServiceLoader class to get access to it.
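The four steps above can be sketched in a single file. Several details here are assumptions made for the illustration: the return type of doWork, the package name com.provider in the commented descriptor, and the helper runAll. In a real modular build the three types would live in three separate modules, and the descriptors shown in the comments would be their module-info.java files.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.ServiceLoader;

// Module descriptors for the three modules (shown as comments; the package
// com.provider is a made-up name for this sketch):
//
//   module myApi      { exports com.api; }
//   module myConsumer { requires myApi; uses com.api.MyService; }
//   module myProvider { requires myApi;
//                       provides com.api.MyService with com.provider.MyServiceImpl; }

/** The service interface; in the article it lives in package com.api of module myApi. */
interface MyService {
    String doWork(); // return type is an assumption for this sketch
}

/** A provider implementation; in a modular build it would be a public class in myProvider. */
final class MyServiceImpl implements MyService {
    @Override
    public String doWork() {
        return "work done by MyServiceImpl";
    }
}

/** The consumer only ever sees the MyService interface. */
final class MyConsumer {

    /** Calls doWork on every service it is given and collects the results. */
    static List<String> runAll(Iterable<MyService> services) {
        List<String> results = new ArrayList<>();
        for (MyService service : services) {
            results.add(service.doWork());
        }
        return results;
    }

    public static void main(String[] args) {
        // ServiceLoader is itself an Iterable over the registered implementations.
        // Run as a single file without any provider registration, it finds none.
        ServiceLoader<MyService> loader = ServiceLoader.load(MyService.class);
        System.out.println("services found: " + runAll(loader));
    }
}
```

Because the consumer code depends only on Iterable<MyService>, adding a new provider module does not require any change to the consumer.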

Linking Modules

Now let's talk about linking modules. Personally, I think this is one of the most interesting and exciting features made possible by the new Java 9 module system. It enables something that has not been available in Java so far: statically linking code together before runtime.

What does it mean to statically link code together, and why would we want to do that?

-------------------------------------------------------------------------------------------------------

Traditionally there are two distinct phases in Java development. The first is compile time, where Java source code is compiled into bytecode. For this we use the Java compiler, as we have done repeatedly with our demo code. The compiled bytecode then moves on to the second phase, runtime, where the bytecode is interpreted and compiled down to native code to run your application. So compiling is the first phase and running the code is the second. Linking sits between these phases: it is a new, optional step that takes the output of compilation, more specifically modules, and links them together into a more efficient format for runtime. I want to stress that linking is completely optional in Java 9 and can only be performed when we have modules.

The goal of linking is to create a so-called "custom runtime image". The idea is that a custom runtime image brings together the code of the Java runtime and of your application to create a fully standalone image that can run the application. To achieve this, the linker uses the module descriptors of your application modules to resolve all the modules they need, including the JDK platform modules, and puts them together in a standalone custom runtime image.

Linking your modules into a runtime image results in a low footprint. On the one hand this means footprint on disk: a custom runtime image will be smaller than the combined size of your application and the full JDK.

On the other hand, a low footprint can also translate into performance gains at runtime. The linking phase is unique in that regard: it is the one place where all the code of the application and the Java platform comes together, which allows whole-program optimizations. One example is dead code elimination: because all code is known at link time, the linker can see which code is used and which is not. Code that is not used can be removed, so it does not have to be loaded at runtime. Currently only a few such optimizations are implemented in the Java linker, but the number will likely grow over time.

jlink - the tool used for linking modules

jlink is a command line tool used to create custom runtime images. jlink itself is a plugin-based application, which means that even after the release of Java 9 it is possible to create new plugins for jlink, for example to implement new optimizations.

Example of a custom runtime image

----------------------------------------------------

Let us look at an example of a custom runtime image. The idea is to create a runtime image containing all the modules needed to run easytext.

We take two modules, easytext.cli and easytext.analysis. We invoke jlink and point it at the cli module. jlink then starts resolving: it sees that the cli module needs the analysis module, so it looks for it on the module path and, if found, adds it to the runtime image. If it is not found, jlink reports an error and no image is built. But resolving does not stop there. jlink also inspects the dependencies on platform modules. In this case that is really simple: our cli and analysis modules only use java.base, so it is added to the runtime image as well.

This translates into saving disk space, and it also saves startup time, because at startup the JVM has to consider only the 3 modules in the image rather than all the platform modules in the JDK.

See the picture below for the situation when we use jlink.
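As a hedged sketch of what such an invocation could look like, the program below runs jlink from Java through java.util.spi.ToolProvider, using the jlink options --module-path, --add-modules and --output. The output directory name easytext-image is made up, and the sketch assumes that the compiled modules are in the out directory and that the JDK keeps its platform modules in a jmods directory under java.home.

```java
import java.io.File;
import java.util.List;
import java.util.Optional;
import java.util.spi.ToolProvider;

// Illustrative sketch: invoking jlink programmatically to build a runtime image.
// Roughly equivalent command line:
//   jlink --module-path <java.home>/jmods:out --add-modules easytext.cli --output easytext-image
final class LinkEasyText {

    /** Builds the jlink arguments for one root module. */
    static List<String> jlinkArguments(String modulePath, String rootModule, String outputDir) {
        return List.of(
                "--module-path", modulePath,   // where to find platform and application modules
                "--add-modules", rootModule,   // root module; its dependencies are resolved from here
                "--output", outputDir);        // directory for the new image (must not exist yet)
    }

    public static void main(String[] args) {
        // Assumption: platform modules are packaged as jmod files under java.home/jmods
        String jmods = System.getProperty("java.home") + File.separator + "jmods";
        String modulePath = jmods + File.pathSeparator + "out";

        // "easytext-image" is a hypothetical output directory name
        List<String> arguments = jlinkArguments(modulePath, "easytext.cli", "easytext-image");

        Optional<ToolProvider> jlink = ToolProvider.findFirst("jlink");
        if (!jlink.isPresent()) {
            System.err.println("jlink is not available in this runtime");
            return;
        }
        int exitCode = jlink.get().run(System.out, System.err, arguments.toArray(new String[0]));
        System.out.println("jlink exit code: " + exitCode);
    }
}
```

If the modules resolve, the resulting image contains its own java launcher, so the application can then be started from the image without a separately installed JDK.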

We can contrast this runtime image with the situation where jlink is not used. In that case we have the full JDK with all 90+ platform modules of the Java platform, and our application modules are put on the module path and run on top of this full JDK.

See the picture below for the situation when we do not use jlink.
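To see the difference on your own machine, a small sketch like the following can be run both on the full JDK and inside a custom runtime image. It compares the modules available in the runtime image (ModuleFinder.ofSystem()) with the modules actually resolved at startup (ModuleLayer.boot()). The exact numbers depend on the JDK version and on how the program is launched, so none are shown here.

```java
import java.lang.module.ModuleFinder;
import java.util.Set;
import java.util.TreeSet;
import java.util.stream.Collectors;

// Illustrative sketch: how many modules does this runtime contain, and how
// many were resolved for the running program?
final class RuntimeModules {

    /** Names of the modules resolved into the boot layer at startup. */
    static Set<String> bootLayerModules() {
        return ModuleLayer.boot().modules().stream()
                .map(Module::getName)
                .collect(Collectors.toCollection(TreeSet::new));
    }

    /** Number of modules present in the runtime image itself. */
    static int systemModuleCount() {
        return ModuleFinder.ofSystem().findAll().size();
    }

    public static void main(String[] args) {
        System.out.println("modules in the runtime image: " + systemModuleCount());
        System.out.println("modules resolved at startup:  " + bootLayerModules().size());
        System.out.println(bootLayerModules());
    }
}
```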

That's all about the Java 9 module system. I hope you enjoyed this tour of its main features.

Please post your comments below.

Happy Learning!!!
