Docker Part I - What is Docker?

Hi, I am Malathi Boggavarapu, and in this post I am going to introduce what Docker is about, with examples and pictures. Let's get started!

Java gives you WORA - Write one, Run anywhere. Docker gives PODA. Why WORA is possible is that we take java soruce code, compile to class file format or Jar fiel or war file and that format is understood by the JVM. As JVM is running on variety of different operating systems which understands the class file format and does what you want to do.

On the same lines, Docker give you PODA - Package once Deploy anywhere. You application is not just Java. It could be any language, could be any OS, could have whole variety of drivers and plugins and extensions and libraries those need to be packaged up, tuning configuration. So it says you take the entire application, package it to an image and that image would then run on variety of platforms.

Java gives WORA which is Write once and Run anywhere and Docker gives you PODA but its not true PODA because image created on Windows would run on Windows and image created on Linux would run on Linux. But it still gives pretty good flexibility and how package up your entire java application, uplevel yourself and say this is my source of truth and this is going to be deployed across a variety of platforms.

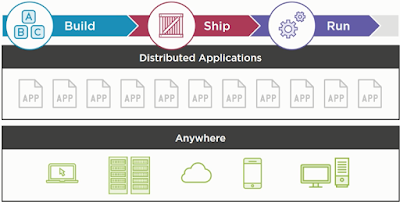

Now at a very high level, we can look that Docker mission is to Build applications, Ship applications and Run applications. They give you tools to build your applications, tools again for shipping applications across different operating systems and deploying, managing and scaling them is the Run part of it. These applications are truely Distributed applications because they could be run on the Cloud or in your data centre or in your laptop, Hybrid, it does not matter. As long as you have Docker engine running over there, it will understand the image format, it will run it and you are cool with that.

Typical question people ask is, i have been doing that with VM's number of years now.what does it really give me???

Well, lets take a look at the below picture then.

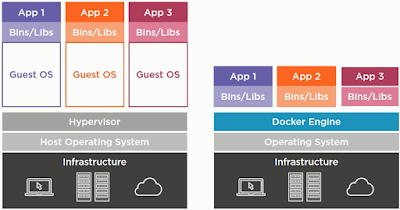

On the left side of the picture, you have infrastructure which is at the bottom such as Cloud, Data center or your laptop. On that data centre, you have host operating system running and on the host you run the Hypervisor and on top of that you run Guest OS. Basically Hypervisor is computer software, firmware or the hardware that runs virtual machines. Let's say you want to run your application on Ununtu and CentOS together, then you would install those two guest OS on the top of existing host OS. And then on each of the OS, you will bundle up your application including your application binaries and libraries. The problem over here is, you have the entire kernel space in host OS and the application space which we are not utilizing and on top of that you are putting another kernel space and another application space which is then required for your application.

So let's see how Docker works. You have the same infrastructure and instead of host operation system, you have an Operating System and on the Operating System the Docker engine will be run. Docker engine is the one that understands the Docker image. Image is where you bundle all of your application together and Docker engine says, you have the application, give it to me and i will run it. Docker requires certain linux technologies such as C groups, namespaces which gives you that control and flexibility to run the application and Docker engine basically simulates those functionalities on any machine or it may talk to the underlying Linux Operating System. But essentially your app only containing the userspace talk to docker engine and then talk to underlying Operating System where the kernel is sitting. So by breaking them apart into two different points, it really allows you higher density, allows better throughput and the way docker images are created will start up much faster. There are lot of benefits of using Docker as supposed to using the VM itself.

As a matter of fact, On a Mac Book if i run Virtual Box and on Virtual Box i could run 4 or 5 VM's and each VM has its own memory, disk space etc. And that's about it, then it comes to a crawl. But i can run hundreds of Docker containers and that allows me to break my application apart into multiple micro services and that makes more relevant.

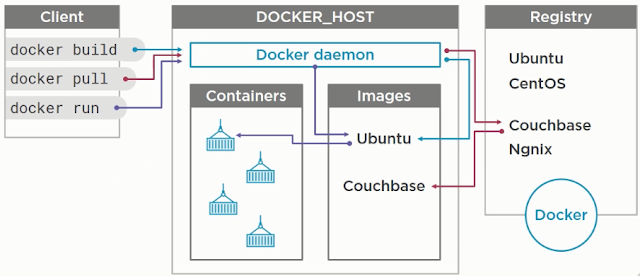

Registry is where anybody and everybody building an image can push it over there. So let's say, you are the leader of the Ubuntu community, you want people be able to run ubuntu image on Docker. So people build the image, push it to Docker hub then from my side i have ability to say, Client, Go talk to docker host and run this image. By default Docker host is configured to download image from Docker hub, so it downloads the image and run it on host itself.

Registry is sort of Maven central for all docker images. Because Maven central is java specific, you only put jars and wars over there. But here anybody and everybody can put an image. For example in above picture you can see, there is an Ubuntu image, Couchbase image and Ngnix image. Anybody can put an image over there and then you can say i want to download that image. The way the image is created, it will have certain dependencies. You don't have to really start from the base operating system image. People has created multi tier purpose images, for example

let's say, "You have an Ubuntu, install JDK on it and want to run app on it. We can create an image for that which says ubuntu-java".

Then you say, Download ubuntu-java but that ubuntu-java has a dependency on ubuntu so you download that base image as well automatically. So it will become very easy. So from user perspective, all you are saying is "Just run the image" and it will figure out the dependencies and download all of them.

Another part is that the client is Dumb client. There is no STATE on the client side. The client really talk to the Docker host by giving the command Docker Run, Docker pull, Docker build. Behind the scenes what's happening is Docker host has a rest endpoint which listens to these requests and when you say Docker build, under the wire it is sending Rest request over TCP which goes to the endpoint and then the host listen to the request and talks to the registry and does the right thing that it needs to do.

So that's all basically the definition of Docker. Please follow my blog for more Docker updates!! Don't forget to post your questions. Please make the blog interactive which makes our learning very exciting.

Happy Learning!

What is Docker?

Docker is both an open source project and a company. It started about 3 years ago at a French company called dotCloud, which wanted to build a better PaaS (Platform as a Service) for developers. Over time they recognized that containers were the way to go, and the company eventually changed its name to Docker.

Java gives you WORA: Write Once, Run Anywhere. WORA is possible because we compile Java source code into the class file format (packaged as a JAR or WAR file), and that format is understood by the JVM. The JVM runs on a variety of operating systems, understands the class file format, and does what you want it to do.

Along the same lines, Docker gives you PODA: Package Once, Deploy Anywhere. Your application is not just Java. It could be written in any language, target any OS, and need a whole variety of drivers, plugins, extensions, libraries, and tuning configuration that all have to be packaged up. Docker says: take the entire application, package it into an image, and that image will then run on a variety of platforms.

Java gives WORA which is Write once and Run anywhere and Docker gives you PODA but its not true PODA because image created on Windows would run on Windows and image created on Linux would run on Linux. But it still gives pretty good flexibility and how package up your entire java application, uplevel yourself and say this is my source of truth and this is going to be deployed across a variety of platforms.
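
To make the "not true PODA" point concrete, here is a minimal Python sketch. Every image records the operating system and CPU architecture it was built for (the `os` and `architecture` fields of its image configuration), and a container runs natively only when those match the host. The function names and the alias table are my own simplification, not a Docker API. On a Mac, for example, Docker Desktop runs Linux images inside a Linux VM, which this sketch reports as "not native".

```python
from __future__ import annotations

import platform

# Simplified mapping from Python's machine names to the architecture names images use.
ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}

def host_platform() -> tuple[str, str]:
    """Return (os, architecture) of this machine, e.g. ('linux', 'amd64')."""
    os_name = platform.system().lower()  # 'linux', 'windows', 'darwin', ...
    machine = platform.machine().lower()
    return os_name, ARCH_ALIASES.get(machine, machine)

def can_run_natively(image_os: str, image_arch: str, host: tuple[str, str] | None = None) -> bool:
    """True when an image built for image_os/image_arch matches the host's kernel and CPU."""
    host_os, host_arch = host or host_platform()
    return image_os == host_os and image_arch == host_arch
```

For example, `can_run_natively("linux", "amd64", host=("linux", "amd64"))` is `True`, while a Windows image on the same Linux host gives `False`.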

Docker Mission, Infrastructure and Workflow

At a very high level, Docker's mission is to build, ship, and run applications. Docker gives you tools to build your applications, tools to ship them across different operating systems, and tools to deploy, manage, and scale them, which is the Run part. These applications are truly distributed, because they can run in the cloud, in your data center, on your laptop, or in a hybrid setup; it does not matter. As long as a Docker engine is running there, it understands the image format and runs your image.
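
Here is a minimal sketch of the Build, Ship, Run cycle expressed as Docker CLI commands driven from Python's `subprocess`. The image name `myuser/myapp:1.0` is hypothetical, pushing assumes you have already run `docker login` for a registry that accepts that name, and by default the sketch only prints the commands instead of running them.

```python
from __future__ import annotations

import shlex
import subprocess

def build_ship_run(image: str, context: str = ".") -> list[list[str]]:
    """Return the three CLI steps for one image tag."""
    return [
        ["docker", "build", "-t", image, context],  # Build: Dockerfile in `context` -> image
        ["docker", "push", image],                   # Ship: upload the image to a registry
        ["docker", "run", "--rm", image],            # Run: start a container, remove it on exit
    ]

def execute(steps: list[list[str]], dry_run: bool = True) -> None:
    for argv in steps:
        if dry_run:
            print(shlex.join(argv))  # show what would be run
        else:
            subprocess.run(argv, check=True)  # stop at the first failing step
```

Calling `execute(build_ship_run("myuser/myapp:1.0"))` prints the three commands; pass `dry_run=False` on a machine where the Docker CLI is installed to actually run them.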

A typical question people ask is: "I have been doing this with VMs for years now. What does Docker really give me?"

Well, lets take a look at the below picture then.

On the left side of the picture, you have the infrastructure at the bottom, such as the cloud, a data center, or your laptop. On that infrastructure the host operating system runs; on the host you run the hypervisor, and on top of that you run guest operating systems. A hypervisor is the software, firmware, or hardware that creates and runs virtual machines. Let's say you want to run your application on Ubuntu and CentOS together: you would install those two guest operating systems on top of the existing host OS, and then on each guest OS you bundle your application, including its binaries and libraries. The problem here is that the host OS already has an entire kernel space and user space that we are not utilizing, and on top of that we add another kernel space and another user space for every guest.

So let's see how Docker works. You have the same infrastructure, but instead of a host OS plus hypervisor plus guest operating systems, you have a single operating system, and the Docker engine runs on it. The Docker engine is the component that understands Docker images. An image is where you bundle your whole application together, and the Docker engine says: give me the application, and I will run it. Docker relies on certain Linux technologies, such as cgroups (control groups) and namespaces, which give you control over and isolation of the running application. On Linux, the Docker engine uses these kernel features directly; on Mac and Windows, Docker Desktop runs Linux containers inside a lightweight Linux virtual machine. Essentially, the image contains only your application's user space, and the Docker engine runs it as a container that shares the kernel of the underlying operating system. By separating the application's user space from the kernel this way, Docker gives you higher density and better throughput, and because of the way Docker images are built, containers start up much faster. There are a lot of benefits to using Docker as opposed to VMs.
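
On Linux you can look at these kernel features from any process. The sketch below (Python, Linux only; the function names are my own) lists the namespaces the current process belongs to by reading the symbolic links under `/proc/self/ns`, and its cgroup membership from `/proc/self/cgroup`. If you run it on the host and again inside a container, identifiers such as `pid`, `net`, and `mnt` should differ, even though both processes run on the same kernel.

```python
import os

def current_namespaces(ns_dir: str = "/proc/self/ns") -> dict:
    """Map namespace type -> identifier, e.g. 'pid' -> 'pid:[4026531836]'."""
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is not mounted
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some entries can be unreadable depending on permissions
    return namespaces

def current_cgroups(path: str = "/proc/self/cgroup") -> list:
    """Lines of /proc/self/cgroup, or an empty list where it is unavailable."""
    try:
        with open(path) as f:
            return [line.strip() for line in f if line.strip()]
    except OSError:
        return []

if __name__ == "__main__":
    for kind, ident in current_namespaces().items():
        print(f"{kind:>18}  {ident}")
    print(current_cgroups())
```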

As a matter of fact, on a MacBook I can run VirtualBox with 4 or 5 VMs, each with its own memory, disk space, and so on, and that's about it before everything slows to a crawl. But I can run hundreds of Docker containers, which lets me break my application apart into multiple microservices and makes that approach practical.
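
The density difference comes from where the operating system cost is paid. Here is a back-of-the-envelope sketch in Python under a deliberately simplified, hypothetical model: RAM is the only constraint, every VM pays for a full guest OS, and containers pay only for the app. Real containers have some per-container overhead, and the figures in the usage note are made up for illustration, not measurements.

```python
def max_vms(host_ram_mib: int, host_os_mib: int, guest_os_mib: int, app_mib: int) -> int:
    """Each VM carries its own guest OS (kernel plus userspace) in addition to the app."""
    return (host_ram_mib - host_os_mib) // (guest_os_mib + app_mib)

def max_containers(host_ram_mib: int, host_os_mib: int, app_mib: int) -> int:
    """Containers share the host kernel, so (in this model) each one pays only for the app."""
    return (host_ram_mib - host_os_mib) // app_mib
```

With hypothetical numbers of 16384 MiB of RAM, 2048 MiB for the host OS, 2048 MiB per guest OS, and a 64 MiB app, `max_vms(16384, 2048, 2048, 64)` is 6 while `max_containers(16384, 2048, 64)` is 224.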

Docker Workflow

There are essentially three pieces to Docker. There is the Client, which talks to Docker. There is the Docker host, a Linux, Windows, or Mac machine where the Docker engine runs; it understands what an image and a container are, and it is also where scaling and managing happen. And there is the Registry, where anybody building an image can push it.

So let's say you are the leader of the Ubuntu community and you want people to be able to run an Ubuntu image on Docker. You build the image and push it to Docker Hub. Then, from my side, I can tell the client: go talk to the Docker host and run this image. By default, the Docker host is configured to download images from Docker Hub, so it downloads the image and runs it on the host itself.
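
The "by default, download from Docker Hub" rule is visible in how short image names are expanded: `ubuntu` really means `docker.io/library/ubuntu:latest`. Below is a simplified Python sketch of that expansion (the function name is mine, and it skips validation such as the lowercase requirement). The first path component is treated as a registry only if it looks like a host name, official images get the `library/` namespace, and `latest` is assumed when no tag or digest is given.

```python
DEFAULT_REGISTRY = "docker.io"  # Docker Hub
OFFICIAL_NAMESPACE = "library"  # official images such as ubuntu or nginx

def normalize_reference(ref: str) -> str:
    """Expand a short image name, e.g. 'ubuntu' -> 'docker.io/library/ubuntu:latest'."""
    name, sep, digest = ref.partition("@")
    first, slash, rest = name.partition("/")
    # A leading component is a registry host only if it contains '.' or ':' or is 'localhost'.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, name
    # Single-component names on Docker Hub live under library/.
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = f"{OFFICIAL_NAMESPACE}/{path}"
    # A tag is a ':' in the last path component; add 'latest' unless a tag or digest is given.
    if ":" not in path.rsplit("/", 1)[-1] and not sep:
        path += ":latest"
    return f"{registry}/{path}" + (f"@{digest}" if sep else "")
```

For example, `nginx:1.25` becomes `docker.io/library/nginx:1.25`, and `localhost:5000/myapp` (a hypothetical private registry) becomes `localhost:5000/myapp:latest`.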

The Registry is sort of a Maven Central for Docker images. Maven Central is Java-specific, so you only put JARs and WARs there, but here anybody can push any image. For example, in the picture above you can see an Ubuntu image, a Couchbase image, and an Nginx image. Anybody can push an image there, and you can then say "I want to download that image". Images can build on other images, so you don't have to start from a base operating system image every time. People have created layered, purpose-built images. For example:

Let's say you have Ubuntu, install a JDK on it, and want to run your app on it. We can create an image for that called ubuntu-java.
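
Here is a minimal sketch of that layering as two Dockerfiles, rendered as text from Python so they are easy to inspect. The tags `ubuntu-java` and `my-app`, the file `app.jar`, and the JDK package name (`openjdk-17-jdk-headless`, which assumes an Ubuntu 22.04 base) are illustrative choices, not anything from the original post.

```python
from pathlib import Path

def dockerfile(base: str, *instructions: str) -> str:
    """Render a Dockerfile: a FROM line followed by the given instructions."""
    return "\n".join([f"FROM {base}", *instructions]) + "\n"

# ubuntu-java: Ubuntu plus a JDK.
UBUNTU_JAVA = dockerfile(
    "ubuntu:22.04",
    "RUN apt-get update && apt-get install -y --no-install-recommends "
    "openjdk-17-jdk-headless && rm -rf /var/lib/apt/lists/*",
)

# The application image builds on ubuntu-java instead of starting from Ubuntu again.
MY_APP = dockerfile(
    "ubuntu-java",
    "COPY app.jar /app/app.jar",
    'CMD ["java", "-jar", "/app/app.jar"]',
)

def write_dockerfile(directory: str, content: str) -> Path:
    """Write `content` to <directory>/Dockerfile, creating the directory if needed."""
    path = Path(directory) / "Dockerfile"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(content)
    return path
```

After writing each file into its own directory (with `app.jar` next to the second one), `docker build -t ubuntu-java ubuntu-java/` followed by `docker build -t my-app my-app/` would produce the two images, the second one reusing everything in the first.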

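Then you say "download ubuntu-java". Because ubuntu-java is built on ubuntu, the ubuntu base is downloaded automatically as well; strictly speaking, the ubuntu-java image already lists the ubuntu layers it is built on, and Docker fetches any of those layers that are not yet present locally. This makes things very easy: from the user's perspective, all you are saying is "just run the image", and Docker figures out the dependencies and downloads all of them.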
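
The "download the base automatically, but only once" behaviour can be modelled in a few lines. This is a hypothetical in-memory model in Python, not how Docker actually stores manifests: each image lists its own layers and its parent, and a pull fetches only the layers the host does not already have.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class Image:
    name: str
    layers: list[str] = field(default_factory=list)  # this image's own layers, bottom to top
    parent: str | None = None                        # the image it was built FROM, if any

# Hypothetical registry contents; image names and layer IDs are placeholders.
REGISTRY = {
    "ubuntu": Image("ubuntu", ["ubuntu-base"]),
    "ubuntu-java": Image("ubuntu-java", ["jdk"], parent="ubuntu"),
}

def resolve_layers(name: str, registry: dict[str, Image]) -> list[str]:
    """Every layer `name` needs, base image first."""
    image = registry[name]
    inherited = resolve_layers(image.parent, registry) if image.parent else []
    return inherited + image.layers

def pull(name: str, registry: dict[str, Image], local_layers: set[str]) -> list[str]:
    """'Download' only the layers missing locally and return them in order."""
    fetched = []
    for layer in resolve_layers(name, registry):
        if layer not in local_layers:
            local_layers.add(layer)  # stand-in for the real download
            fetched.append(layer)
    return fetched
```

Starting from an empty `local = set()`, `pull("ubuntu-java", REGISTRY, local)` returns `['ubuntu-base', 'jdk']`, and a following `pull("ubuntu", REGISTRY, local)` returns `[]` because the base layer is already present.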

Another point is that the client is a dumb client: there is no state on the client side. The client talks to the Docker host through commands such as `docker run`, `docker pull`, and `docker build`. Behind the scenes, the Docker host exposes a REST endpoint that listens for these requests. When you run `docker build`, the client sends a REST request to that endpoint (over a Unix socket by default on Linux, or over TCP when the host is configured for it), and the host handles the request, talks to the registry when it needs to, and does the right thing.
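
To see that the client really is just sending REST requests, here is a minimal Python sketch that talks to the Docker Engine API over its default Unix socket, `/var/run/docker.sock`, using only the standard library. The class and function names are my own. `POST /images/create?fromImage=...&tag=...` is the Engine API call behind `docker pull`, and `GET /_ping` is a simple health check. The real CLI also negotiates an API version, which this sketch skips.

```python
from __future__ import annotations

import http.client
import socket
from urllib.parse import urlencode

DOCKER_SOCKET = "/var/run/docker.sock"  # default Engine API endpoint on Linux

class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock

def pull_request(image: str, tag: str = "latest") -> tuple[str, str]:
    """Method and path that `docker pull image:tag` maps to in the Engine API."""
    return "POST", "/images/create?" + urlencode({"fromImage": image, "tag": tag})

def engine_call(method: str, path: str, socket_path: str = DOCKER_SOCKET) -> tuple[int, bytes]:
    """Send one request to the daemon and return (status, body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request(method, path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

On a Linux host with a running daemon and permission to use the socket, `engine_call("GET", "/_ping")` checks that the daemon is reachable, and `engine_call(*pull_request("ubuntu"))` asks the host to pull the image from the registry, exactly the stateless request and response exchange described above.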

So that's basically the definition of Docker. Please follow my blog for more Docker updates, and don't forget to post your questions. Please make the blog interactive; it makes our learning very exciting.

Happy Learning!
